Right now, there are a variety of shortcuts and tricks that can increase your B2B company’s visibility in ChatGPT, Google AI Overviews, Perplexity and other LLMs. But these are likely to be short-lived. The LLMs will weed them out – just like Google did with early SEO hacks and black hat techniques.

That’s one of the main learnings I took from a recent webinar with Malte Landwehr, CPO and CMO of AI search analytics platform Peec AI. The session, “Hidden Playbooks: How B2B SaaS Companies Dominate LLM Results”, was hosted by Exposure Ninja.

Tricks like publishing self-referencing listicles that promote your own brand as “The best X, Y or Z software” are showing up regularly in AI answers right now. But according to Malte, the LLMs will soon drive them out.

Longer term, if you want to maintain visibility you’ll need to be mentioned by authoritative sources, develop content that demonstrates genuine expertise or be talked about favourably by real people on social media, community and review sites.

Which is why (thankfully for those working in public relations) PR and community management are going to remain important, sustainable ways of building GEO/AIO/AI search/LLM search visibility.

How do LLMs decide how to answer your question?

You probably know that one way LLMs respond to prompts or queries is to take information directly from their training data. The other way is using ‘grounding’ to search the web for up-to-date content they can use in answers. They synthesise content/chunks of text from web pages. And they also include links, i.e. citations, to the web pages or sources (although the links are not always clicked on).

One key piece of advice from Malte: if you can get your website cited as a source through the grounding process, you can greatly influence the answers (and get your own brand mentioned).

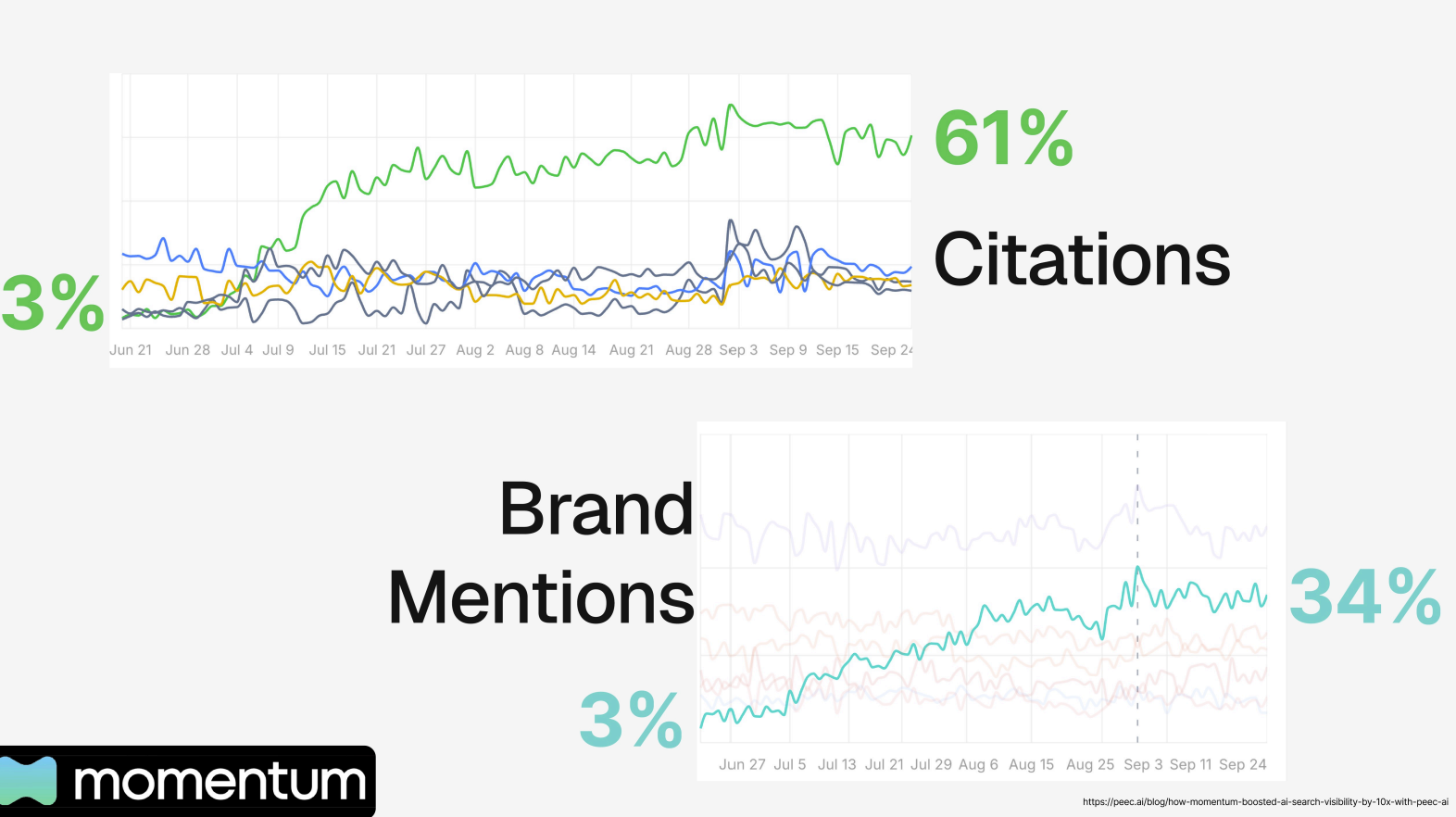

For example, Peec AI noticed that a client it was helping was the source for only 3% of the prompts it was monitoring. After expert content was published on the client’s site over a couple of weeks, this went up to 61%. The client’s brand mentions also went from 3% to 34% (so roughly 11x). Why? Because the client was cited as a source and mentioned its own brand in pages on its own website.

How to structure your web content so LLMs are more likely to use it

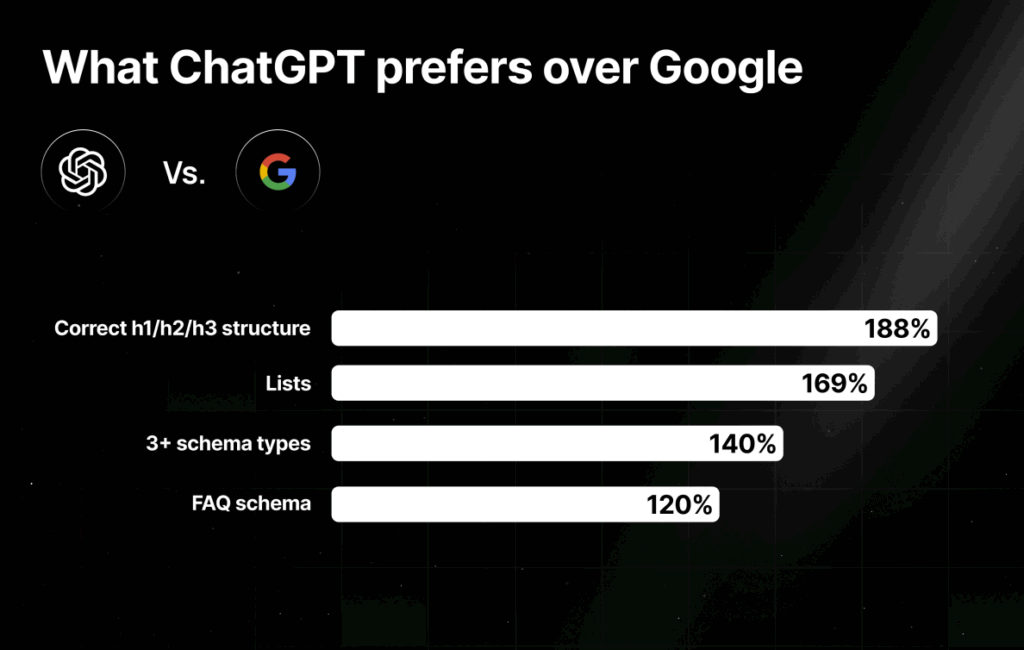

Malte provided tips on how companies can increase the chances of their on-site content being used as a source within LLM answers. One thing that seems to help is getting the structure of your content right. So documents that get cited by ChatGPT tend to:

- Use correct H1 and H2 headings

- Use more lists

- Use schema — specifically FAQ schema

(Malte noted that this is based on correlation studies, not causation. So we might one day find that some of these don’t matter at all).

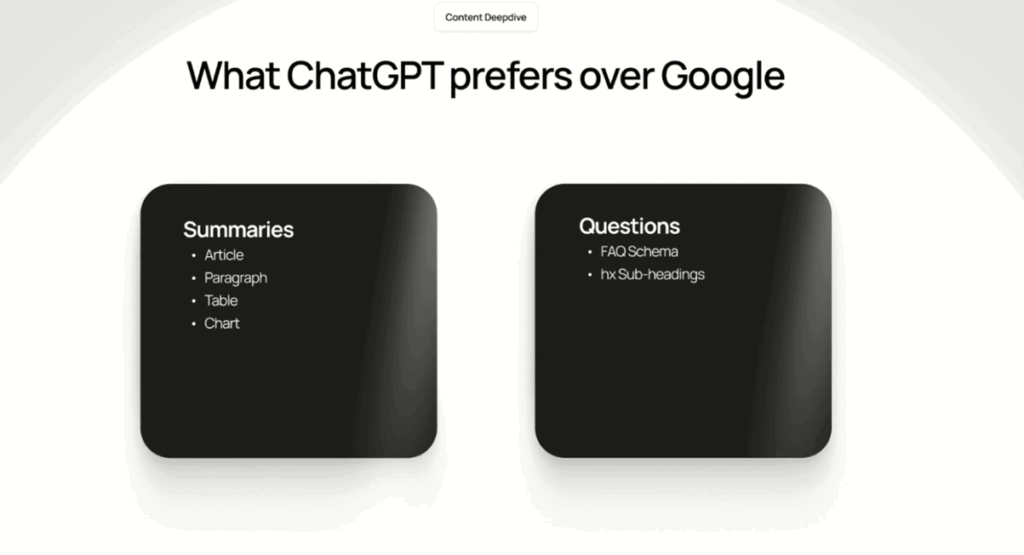

Two other things that work well to make content more accessible to LLMs, and that Malte has tested himself, are summaries and questions.

- Summaries: LLMs pull key passages from documents and synthesise them into AI answers. Writing a summary forces you to be specific and concise, which is what the LLMs prefer. You can summarise a whole page or a long paragraph, or even explain the key findings shown in tables and charts, to increase the chances of LLMs using your content.

- Questions: Often LLMs use fan-out queries — they take what a user has asked and create a list of related questions the model uses to identify relevant content. So if you include questions within your content, there’s a chance it may be an exact match for one of these questions. Use questions in your subheads or use FAQ schema. For example, you can add FAQ schema to the end of your article.
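To make the FAQ schema tip a bit more concrete, here is a minimal sketch of how you might generate FAQ markup for the end of an article. It uses the public schema.org `FAQPage` vocabulary (`Question`, `acceptedAnswer`, `Answer`). The function name `build_faq_jsonld` and the sample questions are my own illustrations, not something Malte showed, and nothing here guarantees that an LLM will pick the content up.

```python
import json


def build_faq_jsonld(faqs):
    """Return a schema.org FAQPage JSON-LD string for (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }
    # ensure_ascii=False keeps curly quotes and accented characters readable.
    return json.dumps(data, indent=2, ensure_ascii=False)


# Hypothetical questions and answers, for illustration only.
example_faqs = [
    (
        "What is grounding in an LLM?",
        "Grounding is when an LLM searches the web for up-to-date content "
        "to use in its answer.",
    ),
    (
        "What is a fan-out query?",
        "A related question the model generates from the user's prompt "
        "to find relevant content.",
    ),
]

# JSON-LD is normally embedded in the page inside a script tag of this type.
print('<script type="application/ld+json">')
print(build_faq_jsonld(example_faqs))
print("</script>")
```

Phrasing each `name` as a question your audience would genuinely ask is the point: it gives the content a chance of matching a fan-out query word for word.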

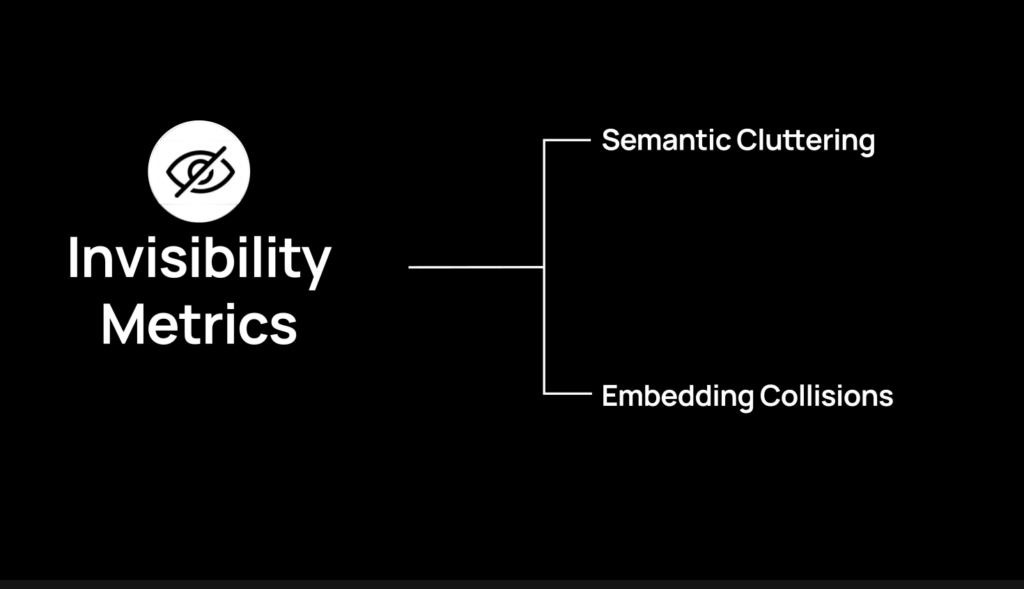

And below are two tactics that will stop your content being picked up in AI answers – Malte suggests avoiding both:

- Semantic Cluttering: Avoid ‘semantic cluttering’ i.e. long, meandering articles that cover many seemingly unrelated topics (like a recipe blogger who describes their holiday before giving the actual recipe). It’s fine to write long content, but LLMs are more likely to use content that is clearly focused on a specific topic or entity.

- Embedded Collisions: This is the tactic of taking an article/content from a high-profile site like Wikipedia and rewriting it with different words to try and game the system. But LLMs vectorise it (turn the meaning into embeddings) and will see that it contains the same information. If an LLM wants to use the content, it is most likely to choose the more credible and popular website. You must include something new or unique if you want LLMs to cite or mention you.
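To illustrate why rewording alone doesn’t help, here is a toy sketch of how two texts can be compared once they have been turned into embeddings. Everything in it is an assumption for illustration: the four-number vectors are made up by hand (real embedding models produce hundreds or thousands of dimensions), the 0.95 threshold is arbitrary, and how any particular LLM actually detects or ranks near-duplicates is not public.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def is_near_duplicate(a, b, threshold=0.95):
    """Treat two embeddings as 'the same information' above a hypothetical threshold."""
    return cosine_similarity(a, b) >= threshold


# Hand-made, hypothetical embeddings. A real system would get these from a model.
original_article = [0.90, 0.10, 0.40, 0.20]   # e.g. the high-profile source page
reworded_copy = [0.88, 0.12, 0.41, 0.19]      # same meaning, different words
unique_take = [0.20, 0.90, 0.10, 0.60]        # adds genuinely new information

print("reworded copy is near-duplicate:",
      is_near_duplicate(original_article, reworded_copy))
print("unique take is near-duplicate:",
      is_near_duplicate(original_article, unique_take))
```

In this toy setup the reworded copy lands almost on top of the original, while the article with something new to say sits somewhere else entirely. That is the intuition behind Malte’s advice to add something unique.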

If you’re not the source, influence the sources that are

Getting your website to become the source is not always going to work. So analyse the sources that appear for the prompts or questions that your audience asks. Which of these can you influence?

- Editorial sources: target your PR to focus on those media outlets. (Obviously it’s not that easy – PR takes effort and knowing how to create stories and insights the media will use. It is not a ‘gimme’.)

- LinkedIn: LinkedIn content is a popular source for B2B content within LLMs, especially LinkedIn Pulse articles.

- Corporate websites: if the sources you come across are corporate websites, you need to get creative. You could consider partnering with them and offering to write content about each other.

- YouTube: YouTube content is regularly picked up in AI searches. If you are creating video for YouTube, the whole transcript is available to LLMs. The LLMs do not see/understand what is actually going on or being said in the video. The title and description are most important.

- Reddit: while it is among the top sources – especially for the US – Reddit is difficult to influence and most subreddits hate commercial content. So you need to be smart and cautious.

- Ecommerce sites: while retail media ads served by ad servers do not get used by LLMs, retailers sometimes offer paid blogs as part of the ad package. And these sometimes get used by LLMs in their answers.

Shortcuts that work now (but probably won’t last)

Malte provided a rundown of easy hacks that people are using to game the LLMs. They work today, but might not work longer term.

- Self-referencing Listicles

Self-referencing listicles, in which companies list themselves as among the top suppliers in their category, still regularly get picked up by LLMs. For example, if you use a prompt like ‘the best CRM software’ you will see examples of articles from CRM providers listing themselves.

In fact, in one B2B category, Peec AI found that 42% of the most cited listicles are self-referencing.

Listicles like this are often a match for LLM fan-out queries, which means they can work really well. And, even if you as a buyer notice that a company is using this tactic for blatant self-promotion – if you see their name listed in a ‘best of’ list, you might still go and check out what they offer.

To make it a bit more credible, some companies are doing listicle exchanges (a bit like link exchanges in SEO) where they agree to post listicles featuring each other. So not as blatant, but still gaming the system.

Listicles are an easy and cheap tactic – and businesses are spamming them a lot. Malte suggests that the LLMs will likely clamp down on this. Already, it’s becoming less helpful in ChatGPT than in Perplexity and AI Overviews. But for now it’s continuing to work (and I’ve just tested for B2B software categories and seen listicles on various LLMs).

- Updating the dates

LLMs have a recency bias. So companies are finding that they can maintain LLM visibility by continually updating the dates on their content without actually refreshing the content.

Right now, Malte suggests that if you simply go through blogs and articles and change the dates, it will improve your visibility. But eventually the LLMs will probably catch on, so it could be a risky tactic.

(NB: The more sustainable approach is to update the content as well as the dates by adding a fresh summary or highlighting new information at the top of an article.)

BTW: Malte made the point that the New Year period could be an interesting time for those who are benefiting from recency bias. Some companies are already writing blogs with titles like ‘The best X, Y or Z product in 2026’. So, will all the 2025 articles immediately get dropped on January 1st for those with 2026 dates? Or will it take time for LLMs to realise and respond?

- Copying competitors

If competitors are being cited, some companies have seen success by pushing the competitors’ content into an AI content generator tool and publishing the output on their own sites. It works for now, but it’s risky and unlikely to last long.

- Using the power of X/Twitter to get featured in Grok

As well as grounding on content from across the web, Grok relies heavily on content from X. In fact, content from even a single post on X has been seen to show up in answers on Grok.

Conclusion: trusted content will outlive the short-cuts in AI search

As AI systems mature, they will increasingly rely on trusted, verifiable, high-quality information. The power is going to shift back to companies that invest in their reputation, whether it’s through sharing genuinely expert content, PR or community building.